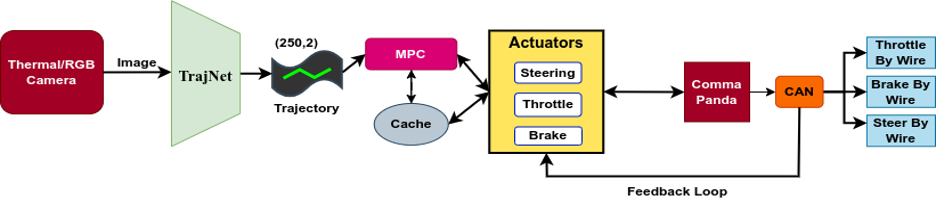

Achieving reliable autonomous navigation at night remains a substantial obstacle in robotics. Although systems based on Light Detection and Ranging (LiDAR) and Radio Detection and Ranging (RADAR) enable environmental perception regardless of lighting conditions, they face significant challenges in environments with a high density of agents because they depend on active emissions. Cameras operating in the visible spectrum are a quasi-passive alternative, yet their performance drops substantially in low-light conditions, hindering both scene perception and path planning. Here, we introduce a novel end-to-end navigation system, the "Thermal Voyager", which leverages infrared thermal vision to achieve truly passive perception in autonomous entities. The system uses TrajNet to interpret thermal visual inputs and produce desired trajectories, and employs a model predictive control strategy to determine the optimal steering angles needed to follow those trajectories. We train TrajNet on a comprehensive video dataset that combines visible and thermal footage with Controller Area Network (CAN) frames. We demonstrate that nighttime navigation using Long-Wave Infrared (LWIR) thermal cameras can rival the performance of daytime navigation systems using RGB cameras. Our work paves the way for scene perception and trajectory prediction powered entirely by passive thermal sensing, making autonomous navigation feasible and reliable irrespective of the time of day.

Ganesh, PB. Dhruval, J. Shalabi, S. Jape, X. Wang, and Z. Jacob, “Thermal Voyager: A Comparative Study of RGB and Thermal Cameras for Night-Time Autonomous Navigation,” ICRA (2024).