Machine perception uses advanced sensors to collect information about the surrounding scene for situational awareness. State-of-the-art machine perception that uses active sonar, radar and LiDAR to enhance camera vision faces difficulties when the number of intelligent agents scales up, because these sensors rely on active emissions. Exploiting the omnipresent heat signal could open a new frontier for scalable perception.

Our key advances in this area are summarized below.

Thermal Night Vision

Heat-Assisted Detection And Ranging (HADAR)

HADAR is our thermal imaging innovation that allows AI to see through pitch darkness as if it were broad daylight. We propose and experimentally demonstrate HADAR, which overcomes the open challenge of ghosting (the texture loss that makes thermal images blurry), and benchmark it against AI-enhanced thermal sensing. HADAR not only sees texture and depth through the darkness as if it were day but also perceives decluttered physical attributes beyond RGB or thermal vision, paving the way to fully passive and physics-aware machine perception. We develop HADAR estimation theory and analyze its photonic shot-noise limits, which set information-theoretic bounds on HADAR-based AI performance. HADAR ranging at night outperforms thermal ranging and achieves accuracy comparable to RGB stereovision in daylight. Our automated HADAR thermography reaches the Cramér–Rao bound on temperature accuracy, outperforming existing thermography techniques. Our work leads to a disruptive technology that can accelerate the Fourth Industrial Revolution (Industry 4.0) with HADAR-based autonomous navigation and human–robot social interactions.

This research was featured in the July 26 issue of the peer-reviewed journal Nature.
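To give a feel for what a shot-noise limit on temperature accuracy means, here is a minimal sketch that computes the Cramér–Rao bound for estimating the temperature of an ideal blackbody from Poisson photon counts in a few LWIR bands. It is a simplified textbook stand-in, not the HADAR estimation theory: it assumes emissivity 1, no reflected environmental radiation, narrow bands, and a known lumped `throughput` constant (a hypothetical parameter combining etendue, integration time, and quantum efficiency). The band layout is likewise hypothetical.

```python
import math

H = 6.62607015e-34   # Planck constant (J s)
C = 2.99792458e8     # speed of light (m/s)
KB = 1.380649e-23    # Boltzmann constant (J/K)


def photon_radiance(wavelength_m, temp_k):
    """Blackbody spectral photon radiance (photons s^-1 m^-2 sr^-1 per metre of wavelength)."""
    x = H * C / (wavelength_m * KB * temp_k)
    return 2.0 * C / wavelength_m**4 / math.expm1(x)


def temperature_crb(temp_k, band_centers_m, band_width_m, throughput):
    """Cramer-Rao lower bound on the standard deviation (K) of an unbiased temperature estimate.

    Assumes each narrow band records independent Poisson photon counts with mean
    N_k = throughput * n(lambda_k, T) * band_width_m, where `throughput` is a
    hypothetical lumped constant (etendue x integration time x quantum efficiency).
    """
    fisher = 0.0
    for lam in band_centers_m:
        x = H * C / (lam * KB * temp_k)
        mean_counts = throughput * photon_radiance(lam, temp_k) * band_width_m
        # dN/dT = N * x * e^x / ((e^x - 1) * T), obtained by differentiating Planck's law
        dmean_dt = mean_counts * x * math.exp(x) / (math.expm1(x) * temp_k)
        # Fisher information that one Poisson count carries about T
        fisher += dmean_dt**2 / mean_counts
    return 1.0 / math.sqrt(fisher)


# Five 1-um-wide LWIR bands between 8 and 12 um (hypothetical sensor design)
bands = [8e-6, 9e-6, 10e-6, 11e-6, 12e-6]
sigma_t = temperature_crb(300.0, bands, 1e-6, throughput=1e-12)
```

Because the Fisher information of a Poisson count about T is (∂N/∂T)²/N, the bound scales as 1/√throughput: collecting four times as many photons halves the best achievable temperature uncertainty, and adding spectral bands can only tighten it.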

Why are thermal images blurry?

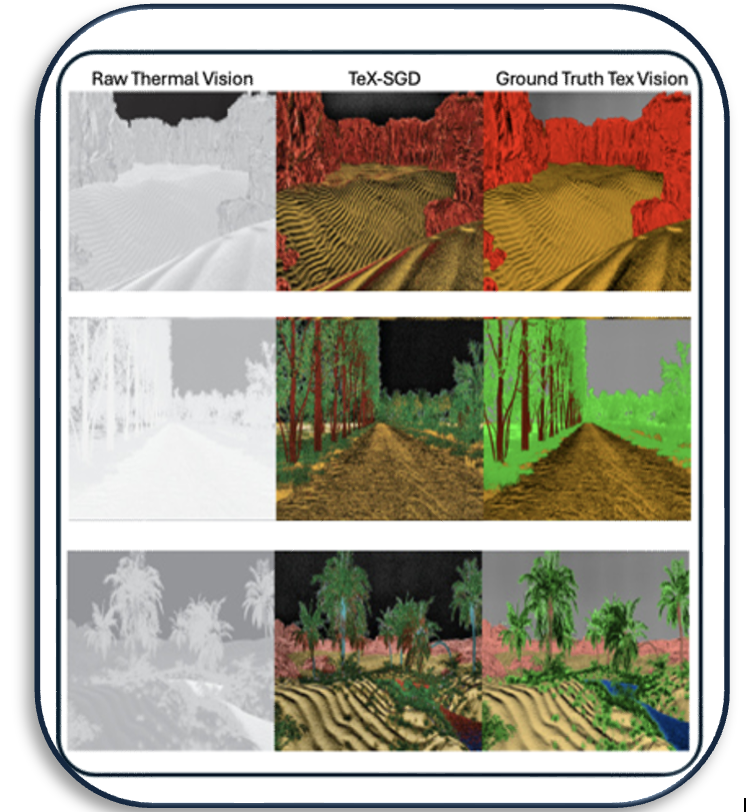

The resolution of optical imaging is limited by diffraction as well as detector noise. However, thermal imaging exhibits an additional, unique phenomenon, ghosting, which results in blurry, low-texture images. Here, we provide a detailed view of thermal physics-driven texture and explain why it vanishes in thermal images that capture heat radiation. We show that spectral resolution in thermal imagery can help recover this texture, and we provide algorithms that recover texture close to the ground truth. We develop a simulator for complex 3D scenes and discuss the interplay of geometric textures and non-uniform temperatures, which is common in real-world thermal imaging. We demonstrate that traditional thermal imaging fails to recover the ground truth in multiple scenarios, while our thermal perception approach successfully recovers geometric textures. Finally, we put forth an experimentally feasible infrared Bayer-filter approach to achieve thermal perception in pitch darkness as vivid as optical imagery in broad daylight.
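The ghosting effect can be reproduced with a toy radiometric model. The sketch below assumes an opaque surface with emissivity e at temperature T inside a uniform blackbody environment at T_env, so the sensor sees e·B(λ, T) + (1 - e)·B(λ, T_env). This model, the function names, and all numbers are our simplifying assumptions, not the simulator described above. At equilibrium (T = T_env) the emissivity pattern, and with it the texture, drops out entirely. Out of equilibrium, two materials can be tuned to look identical in one band yet differ in another, which is why spectral resolution helps.

```python
import math

H, C, KB = 6.62607015e-34, 2.99792458e8, 1.380649e-23  # SI constants


def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance B(lambda, T) in W sr^-1 m^-3."""
    return 2 * H * C**2 / wavelength_m**5 / math.expm1(H * C / (wavelength_m * KB * temp_k))


def observed_radiance(wavelength_m, emissivity, temp_k, env_temp_k):
    """Emitted plus reflected radiance of an opaque surface in a uniform blackbody environment.

    Simplifying assumption: reflectivity = 1 - emissivity (Kirchhoff's law, opaque body).
    """
    return (emissivity * planck(wavelength_m, temp_k)
            + (1 - emissivity) * planck(wavelength_m, env_temp_k))


def matching_temperature(wavelength_m, emissivity, target, env_temp_k, lo=200.0, hi=400.0):
    """Bisect for the surface temperature that yields radiance `target` at one wavelength."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if observed_radiance(wavelength_m, emissivity, mid, env_temp_k) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Ghosting: at equilibrium (T == T_env) every emissivity yields B(T), so texture vanishes
equilibrium = [observed_radiance(10e-6, e, 300.0, 300.0) for e in (0.95, 0.6, 0.3)]

# Out of equilibrium, a second material (e = 0.6) can be tuned to match the first
# (e = 0.95, 300 K) at 10 um in front of a 280 K environment...
env = 280.0
target = observed_radiance(10e-6, 0.95, 300.0, env)
t_b = matching_temperature(10e-6, 0.6, target, env)
# ...yet the two separate once a second band, here 8 um, is also measured
a8 = observed_radiance(8e-6, 0.95, 300.0, env)
b8 = observed_radiance(8e-6, 0.6, t_b, env)
```

A single-band (panchromatic) camera cannot tell the two materials apart, whereas comparing the 8 µm and 10 µm readings exposes the difference in emissivity.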

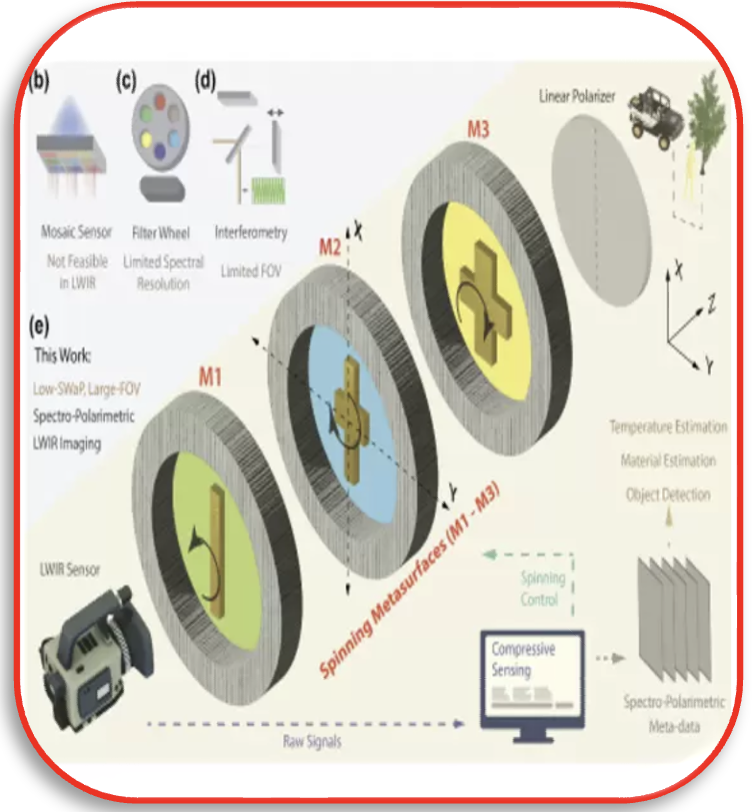
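An infrared Bayer filter trades spatial resolution for spectral resolution, just as an RGB Bayer filter does for color. The sketch below tiles a hypothetical 2×2 pattern of four LWIR bands over the sensor, simulates the raw mosaicked frame, and rebuilds a four-band cube by averaging same-band samples in each 3×3 neighborhood. The band layout, the function names, and the naive demosaicing rule are all our assumptions, not the authors' design.

```python
import numpy as np


def bayer_pattern(height, width, tile_rows=2, tile_cols=2):
    """Band index seen by each pixel under a repeating tile_rows x tile_cols filter tile."""
    rows = np.arange(height)[:, None] % tile_rows
    cols = np.arange(width)[None, :] % tile_cols
    return rows * tile_cols + cols


def mosaic(cube, pattern):
    """Simulate the raw frame: each pixel keeps only the band its filter passes.

    `cube` has shape (bands, height, width); the result has shape (height, width).
    """
    rows, cols = np.indices(pattern.shape)
    return cube[pattern, rows, cols]


def demosaic(raw, pattern, n_bands):
    """Estimate every band at every pixel by averaging same-band samples in a 3x3 window."""
    height, width = raw.shape
    cube = np.zeros((n_bands, height, width))
    for band in range(n_bands):
        mask = (pattern == band).astype(float)
        values = np.pad(raw * mask, 1)   # zero padding keeps border windows in bounds
        weights = np.pad(mask, 1)
        total = np.zeros((height, width))
        count = np.zeros((height, width))
        for dy in range(3):
            for dx in range(3):
                total += values[dy:dy + height, dx:dx + width]
                count += weights[dy:dy + height, dx:dx + width]
        # For a 2x2 tiling (image at least 2x2), every 3x3 window holds each band at least once
        cube[band] = total / count
    return cube


# Smooth synthetic four-band scene (hypothetical data) sampled through the mosaic
yy, xx = np.mgrid[0:32, 0:32]
scene = np.stack([np.sin(0.1 * xx + b) + 0.05 * yy for b in range(4)])
pattern = bayer_pattern(32, 32)
estimate = demosaic(mosaic(scene, pattern), pattern, n_bands=4)
```

Simple averaging is exact for band-wise constant scenes and approximately linear interpolation elsewhere; edge-aware or learned demosaicing would be the natural next step.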

Computational Thermal Imaging using Metasurfaces

Spectro-polarimetric imaging in the long-wave infrared (LWIR) region plays a crucial role in applications from night vision and machine perception to trace gas sensing and thermography. However, the current generation of spectro-polarimetric LWIR imagers suffers from limitations in size, spectral resolution, and field of view (FOV). While meta-optics-based strategies for spectro-polarimetric imaging have been explored in the visible spectrum, their potential for thermal imaging remains largely unexplored. In this work, we introduce an approach for spectro-polarimetric decomposition by combining large-area stacked meta-optical devices with advanced computational imaging algorithms. The co-design of a stack of spinning dispersive metasurfaces along with compressive sensing and dictionary learning algorithms allows simultaneous spectral and polarimetric resolution without the need for bulky filter wheels or interferometers. Our spinning-metasurface-based spectro-polarimetric stack is compact (<10 × 10 × 10 cm) and robust, and it offers a wide field of view (20.5°). We show that the spectral resolving power of our system substantially enhances performance in machine learning tasks such as material classification, a challenge for conventional panchromatic thermal cameras. Our approach represents a significant advance in the field of thermal imaging for a wide range of applications including heat-assisted detection and ranging (HADAR).
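The compressive-sensing idea can be illustrated with a generic sparse-recovery sketch: a spectrum that is sparse in a dictionary D is measured through fewer linear projections than it has spectral samples, then recovered with orthogonal matching pursuit (OMP). This is not the authors' pipeline. The DCT basis stands in for a learned dictionary, and the zero-mean Gaussian matrix A stands in for the transmission responses of the spinning metasurface stack (real transmissions are nonnegative and polarization-dependent, which this sketch ignores). All sizes and coefficients are hypothetical.

```python
import numpy as np


def dct_dictionary(n):
    """Orthonormal DCT-II basis whose columns act as spectral atoms (stand-in for a learned dictionary)."""
    i = np.arange(n)[:, None]   # spectral sample index
    k = np.arange(n)[None, :]   # atom (frequency) index
    basis = np.cos(np.pi * (i + 0.5) * k / n) * np.sqrt(2.0 / n)
    basis[:, 0] /= np.sqrt(2.0)
    return basis


def omp(phi, y, max_atoms, tol=1e-10):
    """Orthogonal matching pursuit: greedily build a sparse alpha with phi @ alpha ~= y."""
    norms = np.linalg.norm(phi, axis=0)
    residual = y.copy()
    support, coef = [], np.zeros(0)
    while len(support) < max_atoms and np.linalg.norm(residual) > tol * np.linalg.norm(y):
        # Select the atom whose normalized correlation with the residual is largest
        support.append(int(np.argmax(np.abs(phi.T @ residual) / norms)))
        # Re-fit all selected coefficients jointly by least squares (the "orthogonal" step)
        coef, *_ = np.linalg.lstsq(phi[:, support], y, rcond=None)
        residual = y - phi[:, support] @ coef
    alpha = np.zeros(phi.shape[1])
    alpha[support] = coef
    return alpha


rng = np.random.default_rng(0)
n_samples, n_measurements = 64, 48                       # hypothetical sizes
D = dct_dictionary(n_samples)
A = rng.standard_normal((n_measurements, n_samples))    # hypothetical sensing matrix
alpha_true = np.zeros(n_samples)
alpha_true[[2, 7, 15]] = [1.0, -0.8, 0.6]                # spectrum built from three atoms
spectrum = D @ alpha_true
y = A @ spectrum                                         # 48 measurements of 64 spectral samples
recovered = D @ omp(A @ D, y, max_atoms=12)
```

OMP is used here only because it is short; ℓ1 minimization (basis pursuit) and iterative shrinkage-thresholding are common alternatives for the same recovery problem.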

Thermal Voyager

Achieving reliable autonomous navigation at night remains a substantial obstacle in robotics. Although systems using Light Detection and Ranging (LiDAR) and Radio Detection and Ranging (RADAR) enable environmental perception regardless of lighting conditions, they face significant challenges in environments with a high density of agents because they depend on active emissions. Cameras operating in the visible spectrum are a quasi-passive alternative, but their performance drops substantially in low light, hindering both scene perception and path planning. Here, we introduce a novel end-to-end navigation system, the "Thermal Voyager", which leverages infrared thermal vision to achieve truly passive perception in autonomous entities. The system uses TrajNet to interpret thermal visual inputs and produce desired trajectories, and employs a model predictive control strategy to determine the optimal steering angles needed to follow those trajectories. We train TrajNet on a comprehensive video dataset of visible and thermal footage together with Controller Area Network (CAN) frames. We demonstrate that nighttime navigation with Long-Wave Infrared (LWIR) thermal cameras can rival the performance of daytime navigation systems using RGB cameras. Our work paves the way for scene perception and trajectory prediction powered entirely by passive thermal sensing, heralding a new era in which autonomous navigation is feasible and reliable irrespective of the time of day.
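The description above does not specify the controller's vehicle model or cost function, so the following is only a generic sketch of how a model predictive controller can turn a predicted trajectory into a steering command. It assumes a kinematic bicycle model at constant speed and a sampling-based search in which each candidate steering angle is held fixed over the horizon. The wheelbase, speed, horizon, cost weights, and the straight reference path (standing in for a TrajNet output) are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float = 0.0        # position (m)
    y: float = 0.0        # position (m)
    heading: float = 0.0  # yaw angle (rad)
    speed: float = 5.0    # m/s, held constant in this sketch


def step(state, steer, wheelbase=2.7, dt=0.1):
    """Advance a kinematic bicycle model by dt seconds at steering angle `steer` (rad)."""
    return State(
        x=state.x + state.speed * math.cos(state.heading) * dt,
        y=state.y + state.speed * math.sin(state.heading) * dt,
        heading=state.heading + state.speed / wheelbase * math.tan(steer) * dt,
        speed=state.speed,
    )


def distance_to_path(px, py, path):
    """Shortest distance from (px, py) to the reference trajectory, treated as a polyline."""
    best = math.inf
    for (ax, ay), (bx, by) in zip(path, path[1:]):
        dx, dy = bx - ax, by - ay
        # Project the point onto the segment and clamp to its endpoints
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)))
        best = min(best, math.hypot(px - (ax + t * dx), py - (ay + t * dy)))
    return best


def mpc_steer(state, path, horizon=10, max_steer=0.5, n_candidates=41, steer_weight=0.1):
    """Return the steering angle whose predicted rollout best tracks `path`.

    Sampling-based simplification: each candidate angle is held constant over the
    horizon, the squared tracking error is summed along the rollout, and only the
    winning angle is applied for the next time step (receding horizon).
    """
    best_steer, best_cost = 0.0, math.inf
    for i in range(n_candidates):
        steer = -max_steer + 2 * max_steer * i / (n_candidates - 1)
        predicted, cost = state, steer_weight * steer**2
        for _ in range(horizon):
            predicted = step(predicted, steer)
            cost += distance_to_path(predicted.x, predicted.y, path) ** 2
        if cost < best_cost:
            best_steer, best_cost = steer, cost
    return best_steer


# Hypothetical reference trajectory standing in for a TrajNet output: a lane 1 m to the left
path = [(0.0, 1.0), (100.0, 1.0)]
state = State()
for _ in range(100):  # 10 s of closed-loop driving
    state = step(state, mpc_steer(state, path))
```

Re-planning at every step is what makes this MPC rather than open-loop planning: only the first control of each optimized plan is executed, so model errors are corrected as new observations arrive.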

Thermal Perception Simulator

The simulator for complex 3D scenes is described above under "Why are thermal images blurry?".